The advent of ChatGPT has brought the impact of generative artificial intelligence (GenAI) on the tertiary education sector to the fore and, along with it, considerable uncertainty about how GenAI will affect academic assessment and integrity.

In a recent interview, Microsoft cofounder and philanthropist Bill Gates referred to AI advancements as the foremost innovation of our era, one that “will change our world”.

Universities seem likely to benefit from GenAI through assistance with marking, commentary on assignments, customised learning experiences and automation of administrative tasks such as answering frequently asked questions.

On the other hand, GenAI also presents challenges to universities because students might misuse it to complete their assessments. GenAI tools such as the AI writer ChatGPT, launched in November 2022, can easily provide answers to assessment types such as multiple-choice questions, short-answer questions, true/false questions, open-ended questions, mathematics problems, programming assignments, essay writing and even content creation for presentation slides.

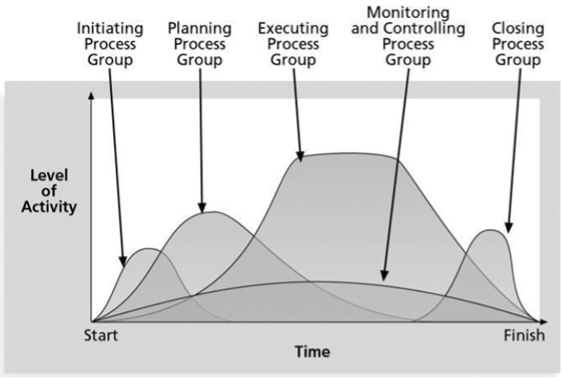

Some academics think that if they use tables or figures in their assignments or exams, students won’t be able to use ChatGPT to produce answers.

So, in order to understand ChatGPT’s functionalities, I fed it a table containing raw data. ChatGPT was able to respond to questions about it as well as draw flowcharts and mind maps. The AI writer also calculated simple parameters such as mean and variance and was able to run complicated analytics techniques on the data.

ChatGPT can research a specific topic and show the results in a table. For example, you can ask ChatGPT to find three projects similar to a project you are working on and draw a table to show year completed, private or government status, link to the website and project location.

I used an example to see if ChatGPT could interpret a figure. I fed ChatGPT a line graph (below) and included the prompt: “In one paragraph, write a thorough interpretation of the figure below in terms of the fluctuation of the activity level and project time.”

Here is the response ChatGPT provided, which doesn’t seem bad at all (ChatGPT by OpenAI, accessed on 8 March 2023).

Students’ access to GenAI casts doubt on whether their responses to many types of assessment are their own work. This is especially the case when students complete these assessments outside class time or have access to computers during the assessment.

The question here is whether universities should ban GenAI. And, if so, are they able to do this?

The answer to both questions is no. Even if universities banned GenAI on their networks, students could still use GenAI platforms at home or on other networks.

What universities need to do urgently is review GenAI technology and its implications for their institutions, formulate relevant policies for its use, train staff and students and, most importantly, reform the assessment system used in their subjects.

Recommended assessment types to mitigate GenAI use

These assessment types can help universities to minimise the adverse effects of GenAI:

- Staged assignments: Break the assignment into stages and ask students to submit a report for each stage; these reports are reviewed and commented on by the instructor. Students work on later stages as per comments received on earlier stages.

- In-class presentations followed by questions: Ask students to give presentations in front of the class; the lecturer then asks students questions about different parts of their presentations. Note that students could still use GenAI to generate slide content for them, but by requiring students to present in front of the class and asking random questions, the instructor can genuinely evaluate students’ learning.

- Group projects: Students are less likely to cheat if they work in groups. Task students with a project that interests them in a group format. They write a project report as well as present the project outcome. Note that individual students might be able to use GenAI to find answers to parts of the project allocated to them, but through asking follow-up questions during the project presentation the instructor can evaluate students’ learning.

- Personal reflection essays: Students write about what they have learned about a specific topic and how they could apply this knowledge in their future careers. This will make it difficult to use GenAI to cheat because the task requires comprehension of a large volume of subject material.

- Class discussion: Provide a topic to the class and have each student present their opinion about the topic or comment on each other’s comments. In face-to-face classes where the use of laptops or mobile phones is not allowed during the discussion, this will make it almost impossible for students to use GenAI to produce responses.

- In-class handwritten exams: This type of assessment should be done in class without access to computers. Without access to computers, laptops and mobile phones during the exam, students are not able to use GenAI to cheat.

- Performance-based assessments: Disciplines such as music, dance, visual arts and architecture would be more suited to this type of assessment.

The arrival of GenAI calls for innovative assessment approaches. The need for such a reform is urgent, and failure to act on it could pose a major threat to any university subject that has been run since 30 November 2022.

So, while platforms using GenAI such as ChatGPT hold great potential to transform higher education, universities must address several challenges posed by this technology with care in order to optimise its benefits and minimise associated risks such as threats to academic integrity.

Amir Ghapanchi is a senior lecturer and course chair in the College of Sport, Health and Engineering at Victoria University, Australia.
